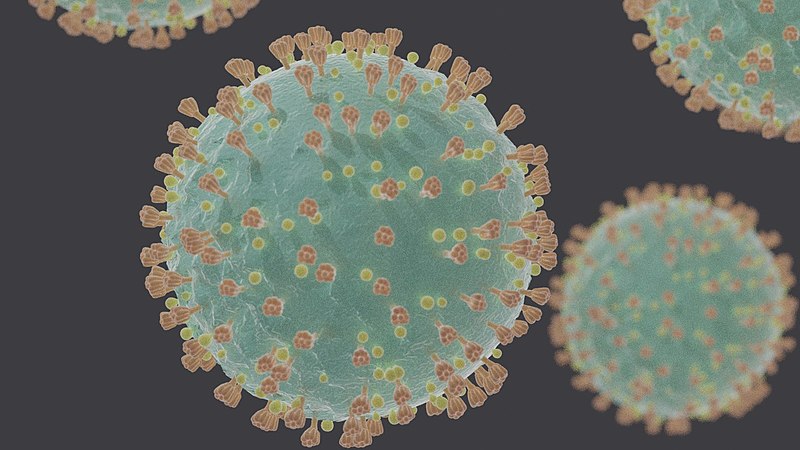

An illustration of the ultrastructural morphology exhibited by coronaviruses. A novel coronavirus has caused a global outbreak of respiratory illness that was first detected in Wuhan, China, in 2019.

Human and AI annotations aim to improve scholarly results in COVID-19 searches

Posted on April 22, 2020

UNIVERSITY PARK, Pa. — To help scholars more quickly and accurately access scientific literature related to the novel coronavirus, two teams of researchers in Penn State’s College of Information Sciences and Technology are exploring how artificial-intelligence-based tools can help to provide instantaneous and up-to-date information about the rapidly evolving nature of the global pandemic.

Both projects analyze publications shared through COVIDSeer, a search engine that sorts through the COVID-19 Open Research Dataset — a free resource of tens of thousands of scholarly articles related to the novel coronavirus.

In the first project, Prasenjit Mitra, professor and associate dean for research in IST and an associate with Penn State’s Institute for Computational and Data Sciences (ICDS), is seeking to automatically construct answers to 10 of the most significant scientific questions identified by epidemiologists and infectious disease experts in response to a call to action from the White House Office of Science and Technology Policy.

The team is working to build a system that provides answers to open-ended queries by identifying the semantics, the type, and the quality of documented evidence in research papers.

The team will use supervised machine learning techniques to extract relevant information from papers, link the extracted information, and then automatically collate evidence found in different documents related to a topic. Then, they’ll crowdsource efforts to verify the accuracy of the extractors and the information’s relevance to the topic, ultimately resulting in a brief summary of what is known to help answer the 10 questions, with references to the original documents.

“Unfortunately, we can’t read all the papers, but we can extract information from scholarly research and automatically and quickly generate meaningful answers to these grand questions,” explained Mitra.

While the hope is to find answers, Mitra also expects the data to surface questions that still need to be answered, such as whether certain symptoms are correlated with the coronavirus. The work could help to bridge the gap between scientists and policymakers.

“Information and knowledge can help us understand the pandemic better and institute better policy guidance at a governmental level,” said Mitra. “Instead of basing it on gut feelings and rules of thumb, the more we make these decisions on evidence and published knowledge, the better.”

Separately, Kenneth Huang, assistant professor of IST, will work with non-expert crowd workers and volunteers to provide useful annotations to the thousands of scientific papers in the CORD-19 dataset. By annotating different aspects in each paper, such as background, purpose, method and contribution, Huang aims to understand more about the paper’s structure and, therefore, potentially provide better information to experts.

“Not all papers in the CORD-19 dataset are directly relevant to the currently spreading novel coronavirus,” said Huang. “Annotating these documents allows us to better index them and make it easier to find useful information, enabling faster responses to the new disease outbreak.”

Annotators will also seek to identify details – such as where and when the research was conducted – that could potentially provide insight into critical details about how the virus spread or why certain policy decisions were made.

Explained Huang, “Human annotation is the battery of artificial intelligence and machine learning. While expert annotation is ideal if you have time, the pandemic is accelerating too rapidly to wait.”

Instead, Huang believes that laypeople can understand the structures of scientific articles and identify different types of information within them. Huang’s team will evaluate the accuracy of these non-expert annotations.

“If we could verify that an average crowd worker can accurately annotate information more than, say, 80% of the time, we will be able to annotate thousands of papers in a week or two without a big drop in quality from what we’d find in an expert,” said Huang.
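The check Huang describes can be illustrated with a minimal sketch. It assumes a small set of sentences that experts have already labeled and compares each crowd worker's labels against them. The worker IDs, the toy labels, and the helper names are hypothetical and are not taken from the project; only the four aspect names and the 80% figure come from the article.

```python
from statistics import mean

# Aspect names mentioned in the article; everything else below is made up.
ASPECTS = {"background", "purpose", "method", "contribution"}

def worker_accuracy(worker_labels, expert_labels):
    """Fraction of items where one worker's label matches the expert label."""
    if len(worker_labels) != len(expert_labels) or not expert_labels:
        raise ValueError("label lists must be non-empty and the same length")
    matches = sum(w == e for w, e in zip(worker_labels, expert_labels))
    return matches / len(expert_labels)

# Hypothetical expert labels for five sentences.
expert = ["background", "purpose", "method", "method", "contribution"]

# Hypothetical labels from three crowd workers for the same five sentences.
crowd = {
    "w1": ["background", "purpose", "method", "contribution", "contribution"],
    "w2": ["background", "purpose", "background", "method", "contribution"],
    "w3": ["background", "purpose", "method", "method", "contribution"],
}

# Per-worker accuracy, then the average across workers.
per_worker = {wid: worker_accuracy(labels, expert) for wid, labels in crowd.items()}
average_accuracy = mean(per_worker.values())

# Threshold from Huang's quote ("more than, say, 80% of the time").
meets_threshold = average_accuracy > 0.80
print(per_worker, round(average_accuracy, 3), meets_threshold)
```

A real evaluation would need far more labeled items, and possibly a chance-corrected agreement measure, before drawing conclusions about annotation quality.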

Both projects have received initial funding through the Coronavirus Research Seed Fund, an initiative launched by the Huck Institutes of the Life Sciences that uses Penn State’s unique research strengths to contribute to the global coronavirus response. This funding was provided with support from the Institute for Computational and Data Sciences.

C. Lee Giles, David Reese Professor of Information Sciences and Technology and an ICDS associate, developed COVIDSeer and serves as a co-investigator on both projects.

— Published on Penn State News
